Discord Tested Age Verification Vendor Persona: What Users Should Know


- Days after backlash to its global age verification rollout, Discord quietly tested a second vendor, Persona, for some UK users.

- Discord’s support documentation (since updated and archived) disclosed that Persona processed user data server-side and retained it for up to 7 days before deletion.

- This differs from k-ID, Discord’s primary partner, which emphasizes on-device facial age estimation and states that no facial images or ID documents are stored.

- Persona’s broader product suite includes risk reports (Email, Phone, Adverse Media, and Social Media profiling), though there is no evidence Discord used anything beyond age verification.

- Persona is backed by Founders Fund (co-founded by Peter Thiel), but there is no evidence of operational overlap with Palantir or access to Discord user data.

- The episode highlights vendor transparency gaps, unanswered questions around manual verification flows, and ongoing behavioral “age inference” profiling on Discord.

Days after Discord’s global age verification announcement sparked user backlash, a surge in deletion requests, and a hasty clarification, another development has emerged that further complicates the platform’s privacy story: Discord tested a second age verification vendor, Persona.

Persona handles data very differently from k-ID, the vendor Discord has been publicly promoting, and may pose an elevated risk to user privacy and data autonomy.

What We Know About Discord’s Persona Experiment

As first reported by PC Gamer, some UK users have been encountering age verification prompts processed by a company called Persona rather than k-ID, the vendor Discord has publicly positioned as its age assurance partner.

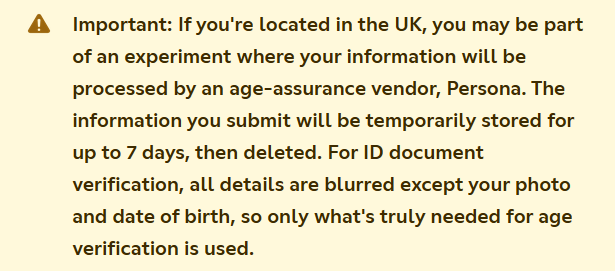

Discord’s own support documentation included a disclaimer for UK users stating they “may be part of an experiment where your information will be processed by an age-assurance vendor, Persona.” The notice added that “the information you submit will be temporarily stored for up to 7 days, then deleted.”

Note: Discord has since updated the relevant support article and removed this disclaimer. The earlier version of the page has been archived, so you can verify the wording for yourself.

This is a notable departure from the privacy assurances Discord has been making. When announcing its global rollout, Discord emphasized that facial age estimation video selfies “never leave your device” and that ID documents are “deleted quickly” by its vendor partners.

The Persona experiment introduces a different model: server-side processing with explicit multi-day retention. When asked for comment by Kotaku, Discord stated that its work with Persona was part of a “limited test” that has “since been concluded.”

k-ID vs. Persona: Key Privacy Differences

The distinction between these two vendors matters for anyone concerned about how their biometric and identity data is handled.

k-ID, Discord’s primary partner, emphasizes on-device processing as a core feature. According to k-ID’s documentation, facial age estimation is processed locally and “no facial images leave the device.” k-ID states it does not store ID documents, facial scans, or biometric data from the verification process; only the outcome (adult or teen classification) is retained.

Persona operates differently. As a server-side identity verification platform, Persona processes data on its infrastructure rather than on users’ devices. According to Persona’s privacy documentation, retention periods are controlled by its business customers. For the Discord experiment specifically, Discord’s support documentation indicated a 7-day retention window before deletion. For users who were relying on Discord’s assurances about k-ID’s “never leaves your device” approach, discovering that some users’ data was being processed through a different vendor with different retention policies is understandably concerning.

The Investor Connection

The Persona experiment has drawn additional scrutiny because of the company’s investor profile. Founders Fund is a venture capital firm co-founded by Peter Thiel, and it’s been a lead investor in Persona’s funding rounds.

As documented in press releases, Founders Fund led a $150 million Series C funding round for Persona in September 2021, valuing the company at $1.5 billion. In April 2025, Founders Fund co-led Persona’s $200 million Series D round alongside Ribbit Capital, bringing the company’s valuation to $2 billion. Thiel is also a co-founder of Palantir Technologies, the data analytics company that has drawn criticism for its surveillance-related work with government agencies.

The Electronic Frontier Foundation, which has been critical of Discord’s age verification approach, has previously documented concerns about Palantir’s surveillance practices.

To be clear: Persona and Palantir are entirely separate companies with no shared operations or data access. Founders Fund is a venture capital firm that invests in hundreds of companies across many sectors; being in a VC’s portfolio does not imply any operational relationship between portfolio companies, nor does it give the VC firm access to customer data. There is no evidence that Thiel personally has any involvement in Persona’s day-to-day operations or any access to data processed through Persona’s platform.

However, for users already skeptical of how their biometric data is being handled, particularly after October’s breach exposed approximately 70,000 government IDs, this investor profile adds another data point to their risk calculus.

Persona’s Broader Product Suite: Social Media Profiling

Beyond age verification, Persona offers its business customers a wider suite of tools, which is useful context for understanding what the company is able to do.

According to Persona’s own product page, the company offers a “Social Media Report” that allows businesses to “evaluate social media signals to better assess risk” and “get a more comprehensive picture of users’ potential risk by factoring in their social media profiles.” Persona’s documentation describes this as part of a broader “Reports” system that can aggregate “multiple first-party and third-party data sources” to build user risk profiles.

Other report types include Email Risk, Phone Risk, and Adverse Media lookups. According to Persona’s privacy policy, the company may collect “publicly available data, including data from governmental public records, the public internet and social media.”

To be clear: there is no evidence that Discord is using, or has ever used, Persona’s Social Media Report feature. Discord’s Persona experiment appears to have been limited to age verification. We are not suggesting Discord or Persona have done anything improper with social media data.

However, the existence of these capabilities within Persona’s product suite is relevant context for users thinking about identity verification ecosystems more broadly. When a vendor’s core business includes both identity verification and social media risk profiling, users may reasonably consider what data correlation could be possible – even if there’s no evidence it’s occurring in this case.

For folks already concerned about Discord’s behavioral profiling via its “age inference model,” understanding the full scope of capabilities offered by vendors in this space adds another dimension to their privacy calculus.

What This Means for Discord Users

Discord says the Persona experiment has concluded. But the episode raises questions worth considering:

- Vendor transparency: Discord’s privacy assurances have centered on specific vendor practices, particularly k-ID’s on-device processing. If Discord tests other vendors with different data handling models, users may not know which vendor is actually processing their data at any given time.

- The manual fallback process: As we’ve covered previously, users who fail automated age verification may be routed into manual appeal processes. October’s breach occurred in precisely these manual flows. Discord hasn’t publicly detailed which vendors or processes handle appeals, or what retention policies apply.

- Behavioral profiling continues: Regardless of which vendor handles explicit verification, Discord’s “age inference model” continues to analyze user behavior to estimate age. Whether you verify via k-ID, Persona, or avoid explicit verification entirely, your activity on the platform is being analyzed.

What You Can Do

Our advice from previous posts remains relevant:

- If you must verify, choose the on-device face scan. This avoids sending your government ID to third-party vendors entirely. Given the ongoing questions about which vendors are actually handling data and how, minimizing document submission is the safer approach.

- Push back on manual verification requests. If automated verification fails and you’re prompted to submit documents via a support channel, request an alternative. The manual process was the vulnerability exploited in October’s breach.

- Minimize your digital footprint. Regardless of which vendors handle age verification, reducing the amount of data that exists on your accounts (message history, old posts, behavioral patterns) limits your exposure in future breaches and reduces the data available for any profiling. Redact lets you bulk delete Discord messages, filter by date or keyword, and set up recurring cleanups across 25+ platforms.

- Stay informed. Discord’s age verification approach continues to evolve. What they say publicly and what they test quietly may differ.

The Bottom Line

Discord’s Persona experiment may have been limited in scope and has since concluded. But the episode illustrates a broader point: the gap between Discord’s public privacy assurances and the complexity of how user data actually flows through its vendor ecosystem.

When Discord tells users their video selfies “never leave the device,” that applies to k-ID’s flow, but apparently not to every vendor Discord tests. When Discord emphasizes “quick deletion,” that doesn’t necessarily mean the same thing across all partners or experiments.

For users trying to make informed decisions about their privacy, the existence of these experiments (and the different data handling models involved) is relevant information. The practical lesson is straightforward: privacy assurances are only as strong as the systems implementing them. Control what you can control, which means being thoughtful about the data you leave on any platform in the first place.

Related Reading:

- Discord Bulk Deletions Surge 350% After Global ID Verification Announcement

- Discord Says Most Users Won’t Need to Verify Their Age. Here’s What They’re Not Telling You.

- Discord’s Global Age Verification Rollout: What It Means for Your Privacy

- 70,000+ Government IDs Leaked in Discord x Zendesk Breach