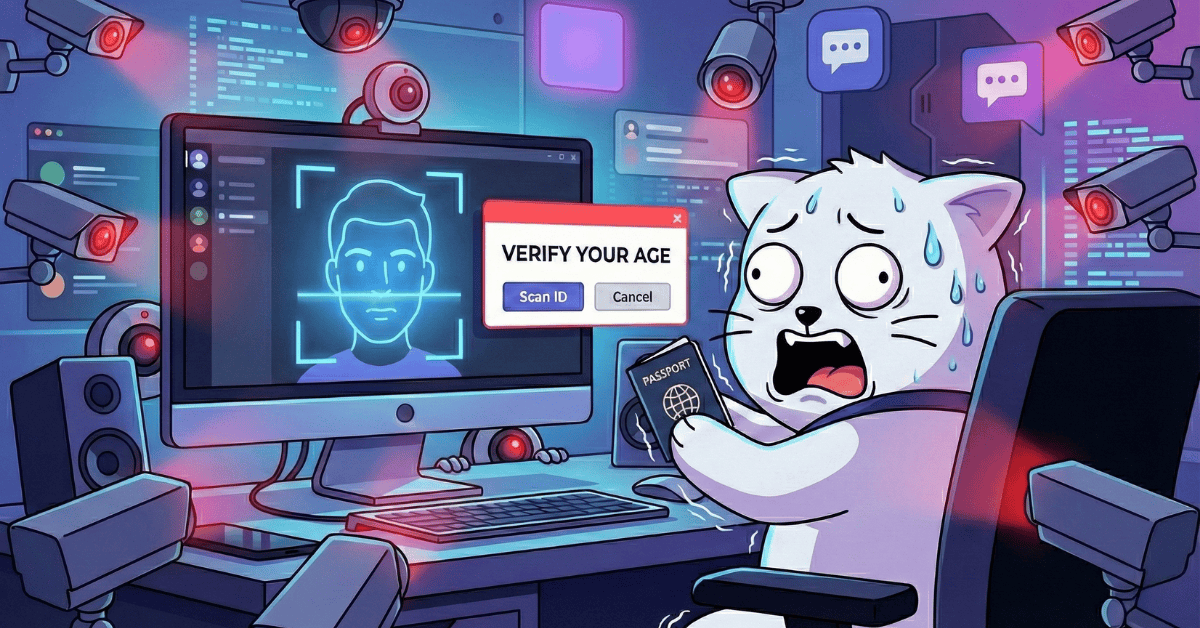

Discord Says Most Users Won’t Need to Verify Their Age – Here’s What They’re Not Telling You


- After backlash to its global age verification announcement, Discord clarified that most users will not need to upload ID or complete a face scan.

- Instead, Discord relies on a background “age inference model” that profiles user behavior to classify accounts as adult or teen.

- The model analyzes signals like account tenure, device type, activity patterns, and game metadata. It excludes message content, but not the rest of your usage.

- Discord frames this as convenience, but it amounts to continuous behavioral profiling applied to the entire user base.

- Users the model can’t confidently classify are funneled toward facial age estimation or government ID submission via third-party vendors.

- Given Discord’s recent data expansion and prior ID-verification breach, critics argue the clarification downplays real privacy and security risks.

After widespread backlash to its global age verification announcement, Discord published a clarification claiming the “vast majority of people” won’t need to complete a face scan or ID upload. Instead, Discord says its “age inference model” will silently classify most adults in the background. To translate: a machine learning system (like the ones that power ad personalization and many other “AI” products) will profile user behavior, and Discord will use that profile to classify users and decide who needs to complete age verification.

Discord’s Damage Control

Less than 24 hours after announcing its global teen-by-default rollout, Discord was already in damage control mode. The company published a detailed safety blog, updated its original press release with an appended clarification and FAQ, and posted a tweet in an attempt to walk back the perception that all users would be forced into face scans or ID uploads.

The core message of the clarification: “Discord is not requiring everyone to complete a face scan or upload an ID to use Discord.” And: “the vast majority of people can continue using Discord exactly as they do today, without ever being asked to confirm their age.”

That sounds reassuring, as any PR-driven response would. Let’s look at what the announcement actually means.

What Discord Is Actually Saying

The clarification rests on a key distinction that was buried in the original announcement: Discord’s “age inference model.” This is a machine learning system that runs in the background and attempts to determine whether an account belongs to an adult based on behavioral signals. As Discord’s safety blog describes it, the model uses “patterns of user behavior and other signals associated with their account.”

Newsweek reported that these signals include account tenure, device type, and activity data. GameSpot noted that the model also uses metadata such as the types of games a user plays. PC Gamer observed that even if you avoid scanning your face or ID, your activity on the platform is still being analyzed. Discord’s own FAQ confirms the model does not use message content – but everything else about how you use the platform appears to be fair game.

In other words, Discord’s reassurance that “most users won’t need to verify” actually means: most users are already being profiled comprehensively.

Three Things Discord’s Clarification Doesn’t Address

1. The age inference model is behavioral surveillance.

Discord frames the inference model as a convenience: a way for adults to skip the verification step. But functionally, it is a system that continuously analyzes your behavior, your activity patterns, the games you play, your device, and your usage hours to build a profile and assign you to a demographic category. The Register pointed out that even a background inference model could be seen as a form of age verification and a degree of privacy intrusion, given Discord’s own privacy policy claims that user data is encrypted and designed to be inaccessible to employees. Whether you consented to this level of behavioral analysis when you signed up for a chat app remains an open question, and one that recent press releases and media statements have not addressed.

This also matters because Discord expanded its ad targeting and data collection policies just months ago, broadening the behavioral data it captures to power sponsored content and “Quests.” The same data signals being used to infer your age (activity patterns, server joins, time spent in channels, connected accounts) are probably the same signals now feeding Discord’s advertising engine. Discord’s clarification FAQ states that age assurance data won’t be used for ad targeting. But that distinction becomes meaningless when the underlying behavioral data already serves both purposes.

2. “Your identity is never associated with your account” is hard to square with reality.

Discord’s clarification makes a bold claim: “Discord only gets your age. That’s it. Your identity is never associated with your account.”

This may be technically true for the automated k-ID verification flow, where ID images are processed by a third party and only an age result is returned. But it was demonstrably not true for the manual age verification appeals that were breached in October 2025. In that incident, as we documented at the time, government IDs were stored alongside Discord usernames, emails, IP addresses, and support ticket conversations, which creates opportunities for direct identity-to-account mapping. As Cybernews reported, the breach involved an estimated 8.4 million support tickets, 520,000+ age verification tickets, partial payment info for 580,000 users, and over 70,000 government IDs.

Discord says the vendor involved in October’s breach “was not involved” in the current age assurance system. That’s good. But the new system still includes manual appeals for users who are incorrectly classified as teens. That’s the same kind of fallback process that created the vulnerable data in the first place. Discord’s clarification doesn’t explain what happens to identity data during these appeals, or which vendor handles them now. They say any associated data will be deleted “quickly”, but history tells a different story.

3. The fallback path still funnels users toward ID submission.

Discord’s clarification emphasizes that face scans and ID uploads are only required “in a minority of cases when additional confirmation is required.” But “minority” is doing a lot of work here when applied to a user base of 200+ million. Even if only 10% of users need manual verification, that’s 20 million face scans or ID submissions passing through third-party vendors.
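To see how quickly a “minority” adds up, here is a minimal sketch of the arithmetic above. It treats 200 million as a round floor for the user base, and the fractions are hypothetical scenarios rather than figures Discord has published. The function name is ours.

```python
# Illustration only: 200 million is the article's round figure for the user
# base, and the fractions below are assumed scenarios, not reported data.
USER_BASE = 200_000_000


def users_needing_verification(fraction: float, user_base: int = USER_BASE) -> int:
    """Return how many users would reach a face scan or ID upload at a given rate."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    return round(user_base * fraction)


# Print a few assumed scenarios side by side.
for fraction in (0.01, 0.05, 0.10):
    print(f"{fraction:.0%} of users -> {users_needing_verification(fraction):,}")
```

Even the 1% scenario works out to 2 million people handing a face scan or ID to a third party.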

And the funnel toward ID submission hasn’t changed. If the age inference model doesn’t flag you as an adult, and the facial age estimation fails or returns an incorrect result, you’ll be asked to submit a government ID, the same kind of document that was compromised four months ago. As Biometric Update reported during the October breach, it was precisely these escalation flows that generated the leaked data. The automated system wasn’t breached; the manual backup was.

Discord’s own FAQ acknowledges this reality: “Users may be asked to use multiple methods only when more information is needed.” For users who look young, have new accounts, or use Discord in ways the inference model doesn’t recognize as “adult behavior,” the road leads back to ID or biometrics.
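The escalation described in this section can be sketched as a simple decision function. This is our own illustration of the funnel from an adult user’s point of view, not Discord’s implementation: every name here (`Outcome`, `fallback_path`, and both parameters) is hypothetical, and the real system’s logic is not public.

```python
from enum import Enum
from typing import Optional


class Outcome(Enum):
    NO_PROMPT = "classified as adult in the background, no prompt"
    FACE_SCAN_ONLY = "confirmed as adult by facial age estimation"
    ID_REQUESTED = "asked to submit a government ID"


def fallback_path(inferred_adult: bool, face_scan_says_adult: Optional[bool]) -> Outcome:
    """Trace an adult user's path through the funnel described above.

    inferred_adult: hypothetical flag for the inference model's result.
    face_scan_says_adult: hypothetical facial estimation result; None means
    the estimation failed outright.
    """
    if inferred_adult:
        return Outcome.NO_PROMPT
    if face_scan_says_adult:
        return Outcome.FACE_SCAN_ONLY
    # Estimation failed (None) or wrongly returned "not adult" (False).
    return Outcome.ID_REQUESTED


# An adult the model did not flag, whose face scan failed, ends up at ID.
print(fallback_path(inferred_adult=False, face_scan_says_adult=None).value)
```

The point of the sketch is that only the first branch avoids third-party vendors entirely. Every other path involves biometrics or a government document.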

The Bigger Problem: You’re Being Profiled Either Way

Discord’s clarification is designed to make users feel better about the March rollout. And in fairness, the distinction matters: if the inference model works as described, most long-standing adult users probably won’t be prompted to scan their face or upload ID. That’s genuinely better than the universal verification the original announcement implied.

But the framing creates a false binary. The choice isn’t between “verification” and “no verification.” It’s between explicit identity collection (face scan, government ID) and silent behavioral profiling (the inference model analyzing everything you do on the platform). In both cases, Discord is building a richer picture of who you are and how you use the platform. You’re being told to pick your poison.

Combined with Discord’s expanded ad targeting, the repeated scraping of public server messages, and the Zendesk breach, the pattern is clear: the amount of data Discord holds about each user is growing rapidly, and its track record of keeping that data safe is, to put it generously, mixed.

What You Should Do

Our advice from yesterday’s post remains the same, but the clarification adds one more consideration:

Understand the inference model. If you don’t verify manually, Discord is using your behavioral data to assign you an age group. This means the games you play, the servers you join, your activity hours, and your device data are all being processed by a machine learning model. Whether you consider that acceptable depends on how you feel about silent profiling, but you should at least know it’s happening.

Clean up your footprint. The inference model uses “patterns of behavior and signals associated with your account” to estimate your age. Your message history in public servers, your server memberships, and your activity data all feed this system, and they are exactly the kind of data that has been scraped en masse by third parties. Cleaning up old messages and leaving servers you no longer use doesn’t just protect you from scrapers; it also limits the data feeding Discord’s internal profiling systems.

Don’t let the clarification create a false sense of security. Discord’s walk-back sounds comforting, but the underlying system hasn’t changed. Everyone is still in teen-by-default mode. Everyone is still being profiled by the inference model. And the fallback path for anyone the model can’t classify still leads to face scans or ID uploads, handled by third-party vendors, four months after the last third-party vendor was breached.

How Redact Can Help

Whether Discord classifies you through an algorithm or a face scan, the data on your account is what makes you a target when things go wrong. A smaller footprint means less to scrape, less to infer from, and less to lose in a breach.

Redact lets you bulk delete messages on Discord, including posts in public servers and DMs. Filter by keyword, channel, or date range. Set up automated recurring deletions so old messages don’t accumulate. And clean up across 25+ other platforms while you’re at it.

You can try Redact free for deletions on Discord, Twitter, Facebook, and Reddit.