Can Google Actually Read Your Emails? (2025 Verdict)


Last week, headlines exploded with claims that Gmail was scanning your emails and attachments to train Google’s AI models – unless you opted out.

Then Malwarebytes, one of the most widely cited sources, issued a correction.

So, what happened?

Not a sudden privacy invasion. Not brand-new AI training behavior.

Instead: a perfect storm of misleading UI, vague wording, and overlapping data-use settings that even security professionals misinterpreted.

This isn’t a story about Google quietly implementing a new AI training source – it’s about how Google communicates with its customers.

In Short:

- Gmail didn’t suddenly start reading your emails for Gemini training

- It has always scanned inbox content for “smart features” (categorization, suggestions, recommendations)

- Malwarebytes misread a new prompt because Google’s UX is confusing and poorly communicated

- If privacy experts can’t parse the settings, normal users have no chance

- This incident shows how broken “informed consent” is in big tech ecosystems

- The fix isn’t another toggle – it’s data minimization and control, including deleting data trails outside Google

1. What Actually Happened?

Malwarebytes published (and then corrected) a post claiming Gmail was training Google’s AI models on inbox content unless users opted out via a new toggle.

But after rechecking the wording, they clarified:

- The feature wasn’t new

- The data-use wasn’t new

- What was new: the wording of a prompt related to Gmail’s “smart features”, which at first glance looked like a broad AI-training opt-in

This was enough to spark days of viral headlines.

Why?

Because Google’s privacy interface is so fragmented that even security vendors misread it.

2. Gmail’s ‘Smart Features’ Already Scan Your Emails – And Always Have

The reality: Gmail has been analyzing your inbox for over a decade.

Google uses email content (when these toggles are on) to power features like:

- Auto-categorized tabs

- Smart Compose / Smart Reply

- Drive and Calendar suggestions pulled from emails

- “Important” and priority inbox ranking

- Activity signals used to improve these features

- Workspace cross-product personalization

This is not the same as “training general-purpose AI models like Gemini on your inbox,” but it is still deep, ongoing content analysis.

And it’s bundled under perhaps the most misleading UX label in tech: Smart Features.

It’s like calling a data mining feature “Very Good Mode.”

You’d be a fool not to enable Very Good Mode!

/s

3. If Security Researchers Can Misread Google’s Settings, What Chance Does the Average User Have?

Here’s the part everyone is missing:

The incident didn’t reveal a new privacy invasion. It revealed that privacy controls are so unclear that even experts (such as Malwarebytes) can’t correctly interpret them on first pass.

And that’s the real harm.

When users don’t understand how consent works, consent loses its meaning.

Ask yourself:

- Is a toggle meaningful if you don’t understand what it controls?

- Is it informed consent if the same data-use pattern lives under three different settings pages?

- Is it transparency if your inbox powers product improvement buried under “smart features”?

This is “consent” in name only: data sharing presented as a UX checkbox for a ‘helpful feature’, not as a privacy decision.

4. Google’s Design Makes Confusion Inevitable

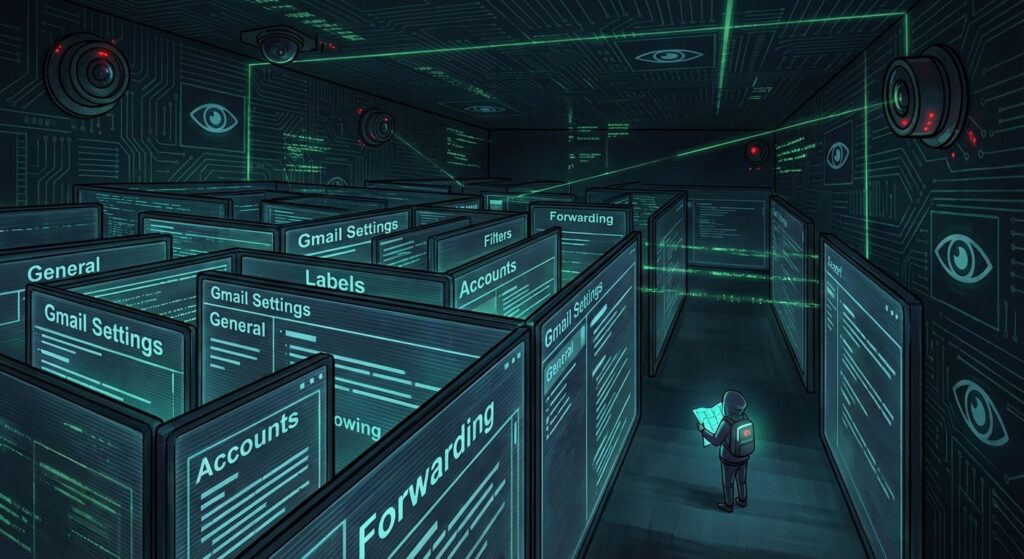

Google’s ecosystem is littered with overlapping controls:

- Gmail “Smart Features”

- Workspace “Smart Features & Personalization”

- Web & App Activity

- Gemini data settings

- Ad Personalization

- Cross-product personalization

Individually, each toggle has a description that sounds safe. Collectively? They form a maze of plausible deniability, where the real data flows are visible only to Google’s attorneys.

This is exactly why Malwarebytes – a security company – misinterpreted the new wording.

If the experts are confused, users aren’t consenting. They’re guessing.

5. The Bigger Problem: Normalized Surveillance

What makes this story worth telling isn’t the temporary misunderstanding.

It’s the reality beneath it:

We have normalized the idea that your inbox is fair game for machine analysis, as long as it’s labeled “helpful.”

The correction doesn’t change that. If anything, it reinforces it.

Google didn’t suddenly become creepy.

The creepiness has been there for years – we just stopped noticing.

6. How to Actually Lock Down Gmail (and What You Can’t Stop)

Here’s what users can do today:

Turn off Gmail smart features

Settings → See all settings → General → Smart features.

Disable Smart Features and Personalization.

Turn off Workspace Smart Features & Cross-Product Personalization

Google Account / Workspace Admin → Data & Privacy

Disable “Smart Features & Personalization in Other Google Products.”

Turn off Web & App Activity

If you’re an Android user, consider disabling this setting. This reduces data sent for product improvement signals.

What you cannot disable

Even with everything off:

- Google still scans for spam and abuse

- Metadata is still logged

- Google still has historical data from years of “smart features” being on

- Google still has full access to email contents when you use Workspace features

Which brings us to the next point.

7. You Can’t Fix Google’s Data Appetite. You Can Fix Your Data Footprint.

At Redact.dev, we think about privacy in a way tech companies don’t want you to:

You can’t meaningfully control a platform’s data practices when those practices are built into the architecture. The only thing you can control is the amount of data that exists about you in the first place.

Gmail scanning is one part of the equation.

The other part is everything your email address connects to:

- Old accounts

- Breached profiles

- Tracking-heavy social media

- Data brokers

- Identity graphs

- Auto-imported contacts

- Apps you forgot you authorized

- Services quietly syncing your data

Reducing that surface area is how you regain privacy power – and that is exactly what Redact.dev gives people the ability to do.

8. The Gmail AI Panic Proves One Thing: Privacy Settings Are Not Enough

People panicked because a UI change looked like a new AI invasion. They calmed down because a correction said it wasn’t new.

Both sides miss the actual problem:

The system is designed so that ordinary people can’t possibly understand how their data is used.

That’s not an accident – it’s a business model.

Whenever you hear “smart features,” read: We use your data in ways you don’t fully understand.

9. Final Takeaway: Don’t Let Confusing Settings Decide Your Privacy

Gmail didn’t suddenly open your inbox to Gemini. But the fact that so many thought it did exposes how fragile user understanding is – and how much power companies have when privacy is opt-out, hidden, or softened with cute labels.

If the average user can’t parse the settings, then the settings are not real control.

Real control is data minimization, reversibility, and reducing your footprint across the internet.

That’s where Redact.dev comes in – the world’s largest digital footprint management tool. With Redact, you can mass delete emails from Gmail and other email platforms, as well as content from around 30 social media platforms. You can try Redact for free on Facebook, Discord, Twitter, and Reddit.